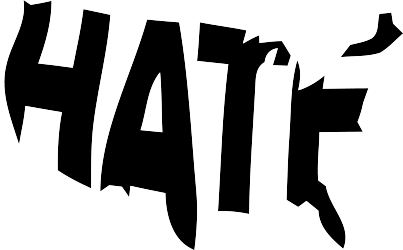

I have had the pleasure of running a lifestyle brand that creates and sells clothing and other merchandise rooted in thoughtfulness, social consciousness, and the lived pains and experiences of marginalized people. And it might be because of this that I find myself in a paradox.

I’m writing today from a place I never seek out, but one that finds me anyway, just often enough to sting every time. That place is seller’s remorse. It is like the first bite into a fresh grapefruit: the tangy sweetness is followed by the unavoidable bitterness.
